December 5, 2023

The Magic of Animation: How MagicAnimate Advances Human Image Animation

Human image animation aims to create realistic videos of people by animating a single reference image. However, existing techniques often struggle with maintaining fidelity to the original image and smooth, consistent motions over time. Enter MagicAnimate – an open source image animation AI that leverages the power of diffusion models to overcome these challenges.

Created by a team of researchers seeking to enhance video generation quality, MagicAnimate incorporates novel techniques to improve temporal consistency, faithfully preserve reference image details, and increase overall animation fidelity. At its core is a video diffusion model that encodes temporal data to boost coherence between frames. This works alongside an appearance encoder that retains intricate features of the source image so identity and quality are not lost. Fusing these pieces enables remarkably smooth extended animations.

Early testing shows tremendous promise – significantly outperforming other methods on benchmarks for human animation. On a collection of TikTok dancing videos, MagicAnimate boosted video fidelity over the top baseline by an impressive 38%! With performance like this on complex real-world footage, it’s clear these open source models could soon revolutionize the creation of AI-generated human animation.

We’ll dive deeper into how MagicAnimate works and analyze the initial results. We’ll also explore what capabilities like this could enable in the years to come as the technology continues to advance.

How MagicAnimate Works

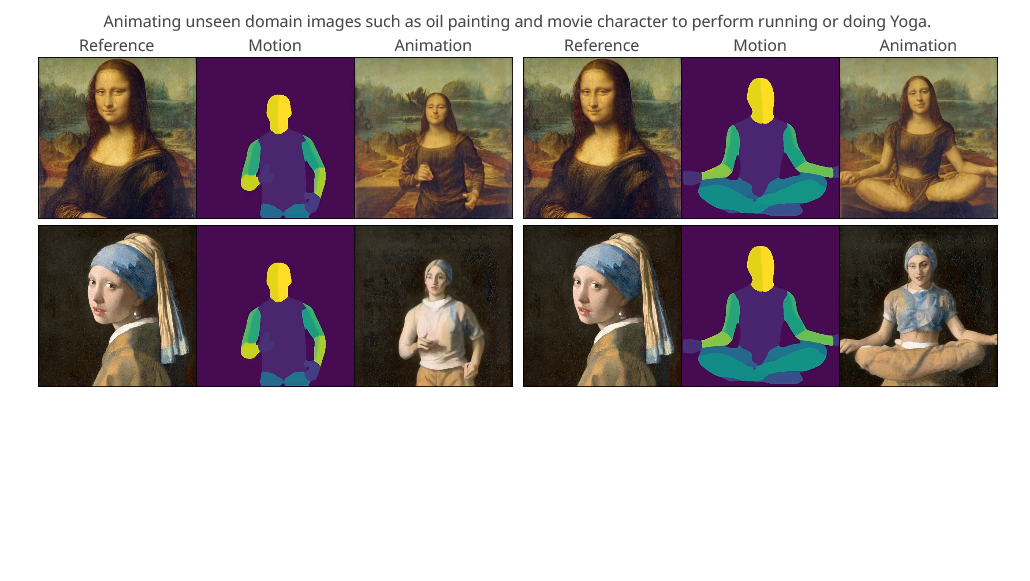

MagicAnimate is a diffusion-based framework designed for human avatar animation with a focus on temporal consistency. It effectively models temporal information to enhance the overall temporal coherence of the animation results. The appearance encoder not only improves single-frame quality but also contributes to enhanced temporal consistency. Additionally, the integration of a video frame fusion technique enables seamless transitions across the animation video. MagicAnimate demonstrates state-of-the-art performance in terms of both single-frame and video quality, and it has robust generalization capabilities, making it applicable to unseen domains and multi-person animation scenarios.

Diagram the MagicAnimate paper, Given a reference image and the target DensePose motion sequence, MagicAnimate employs a video diffusion model and an appearance encoder for temporal modeling and identity preserving, respectively (left panel). To support long video animation, we devise a simple video fusion strategy that produces smooth video transition during inference (right panel).

Comparing to Animate Anyone

MagicAnimate and Animate Anyone both belong to the realm of text-to-image and text-to-video generation, leveraging diffusion models to achieve superior results. However, they exhibit distinctive approaches in their methodologies and applications.

1. Image Generation Backbone:

- MagicAnimate: The framework predominantly employs Stable Diffusion as its image generation backbone, emphasizing the generation of 2D optical flow for animation. It utilizes ControlNet to condition the animation process on OpenPose keypoint sequences and adopts CLIP to encode the reference image into a semantic-level text token space, guiding the image generation process through cross-attention.

- Animate Anyone: In contrast, Animate Anyone focuses on the diffusion model for image generation and highlights the effectiveness of diffusion-based methods in achieving superior results. It explores various models, such as Latent Diffusion Model, ControlNet, and T2I-Adapter, to strike a balance between effectiveness and efficiency. It delves into controllability by incorporating additional encoding layers for controlled generation under various conditions.

2. Temporal Information Processing:

- MagicAnimate: While MagicAnimate produces visually plausible results, it tends to process each video frame independently, potentially neglecting the temporal information in animation videos.

- Animate Anyone: Animate Anyone draws inspiration from diffusion models’ success in text-to-image applications and integrates inter-frame attention modeling to enhance temporal information processing. It explores the augmentation of temporal layers for video generation, with approaches like Video LDM and AnimateDiff introducing motion modules and training on large video datasets.

3. Controllability and Image Conditions:

- MagicAnimate: MagicAnimate conditions the animation process on OpenPose keypoint sequences and utilizes pretrained image-language models like CLIP for encoding the reference image into a semantic-level text token space, enabling controlled generation.

- Animate Anyone: Animate Anyone explores controllability extensively, incorporating additional encoding layers to facilitate controlled generation under various conditions such as pose, mask, edge, depth, and even content specified by a given image prompt. It proposes diffusion-based image editing methods like ObjectStitch and Paint-by-Example under specific image conditions.

While both MagicAnimate and Animate Anyone harness diffusion models for text-to-image and text-to-video generation, they differ in their choice of image generation backbones, their treatment of temporal information, and the extent to which they emphasize controllability and image conditions. MagicAnimate puts a strong emphasis on Stable Diffusion and cross-attention guided by pretrained models, while Animate Anyone explores a broader range of diffusion models and integrates additional encoding layers for enhanced controllability and versatility in image and video generation.

Closing Thoughts

As AI-powered image animation continues advancing rapidly, the applications are becoming increasingly versatile but may also raise ethical concerns. While MagicAnimate demonstrates promising results, generating custom videos of people requires careful consideration.

Compared to recent sensation AnimateAnyone which produced very realistic animations, MagicAnimate does not yet achieve the same level of fidelity. However, the results here still showcase meaningful improvements in consistency and faithfulness to the source.

As the code and models have not yet been opened sourced, the degree to which the demo videos reflect average performance remains unclear. It is common for research to highlight best-case examples, and real-world results vary. As with any machine learning system, MagicAnimate likely handles some examples better than others.

Nonetheless, between AnimateAnyone, MagicAnimate and other recent papers on AI-powered animation, the pace of progress is staggering. It’s only a matter of time before generating hyper-realistic animations of people on demand becomes widely accessible. And while that enables creative new applications, it also poses risks for misuse and mistreatment that could violate ethics or consent.

As this technology matures, maintaining open and thoughtful conversations around implementation will be critical. But with multiple strong approaches now proven, high-quality human animation powered by AI appears inevitable.