October 3, 2023

Google DeepMind’s Advances in General-Purpose Robotics Learning

Robots excel as specialists but lag behind as generalists. To address this, Google DeepMind, in collaboration with 33 academic labs, has developed a set of resources aimed at advancing general-purpose robotic learning. This blog post explores that work, with a focus on the Open X-Embodiment dataset and the RT-1-X robotics transformer model.

The Dawn of Robotic Generalists: Open X-Embodiment Dataset and RT-1-X Model

Traditionally, robots are trained separately for each task, robot type, and environment, and changing any one of these usually means retraining from scratch. Google DeepMind has released resources that can significantly enhance a robot’s ability to learn across different robot types: the Open X-Embodiment dataset and the RT-1-X robotics transformer model.

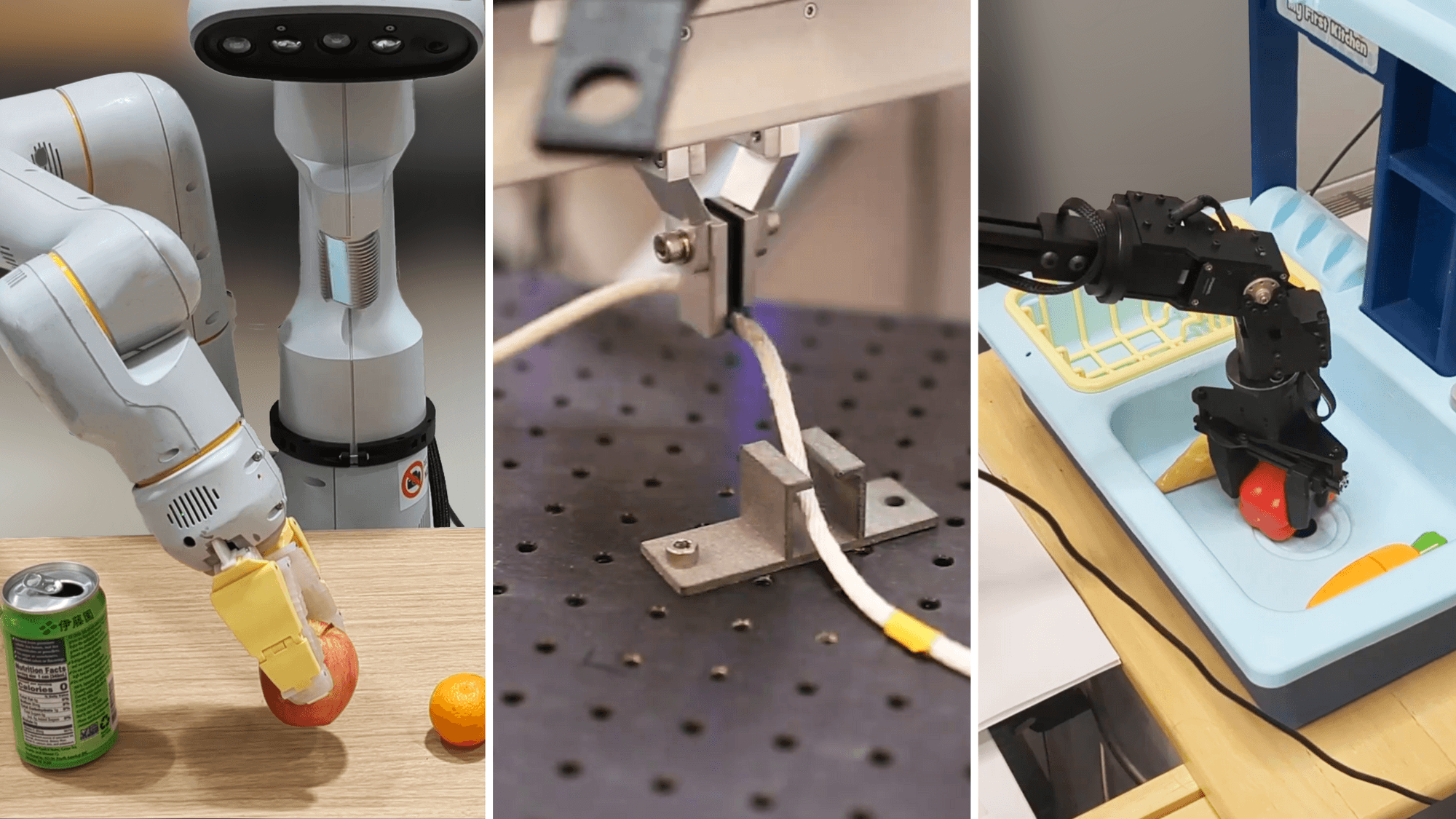

By pooling data from 22 robot types, Google DeepMind and its partners created a highly diverse dataset. The RT-1-X model, trained on this dataset, shows skill transfer across many robot embodiments.
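As a rough illustration of what pooling data across robot types can look like, the sketch below merges per-robot episode lists into one dataset and tags each episode with its embodiment. The `Episode` structure, the `pool_datasets` helper, the robot names, and the sample episodes are all hypothetical; none of them come from the Open X-Embodiment release.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class Episode:
    """One recorded robot trajectory (hypothetical, simplified schema)."""
    embodiment: str   # which robot type produced the data
    instruction: str  # natural-language task description
    num_steps: int    # length of the trajectory


def pool_datasets(per_robot: dict[str, list[tuple[str, int]]]) -> list[Episode]:
    """Merge per-robot episode lists into one list, tagging each episode."""
    pooled = []
    for embodiment, episodes in per_robot.items():
        for instruction, num_steps in episodes:
            pooled.append(Episode(embodiment, instruction, num_steps))
    return pooled


# Placeholder data for two made-up robot types.
raw = {
    "arm_a": [("pick up the cup", 40), ("open the drawer", 55)],
    "arm_b": [("pick up the cup", 38)],
}
pooled = pool_datasets(raw)

# Count how many episodes each embodiment contributes to the pooled set.
counts = Counter(ep.embodiment for ep in pooled)
print(counts)
```

Keeping the embodiment label on every episode makes it possible to inspect, filter, or reweight the pooled data later.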

A Leap Forward in Performance: The Benefits of Multiple Embodiment Training

Training a single model on data from many embodiments yields better performance across many robots than training separate models on data from individual embodiments. This was borne out when the RT-1-X model was tested in five different research labs, where it showed a 50% improvement in success rate on average across five commonly used robots. Additionally, the RT-2 vision-language-action model tripled its performance on real-world robotic skills when trained on data from multiple embodiments.
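The post does not spell out how the average improvement is computed. One plausible reading, sketched below, is a relative improvement in success rate per robot, averaged across robots. The function names and the success rates are placeholders chosen for illustration, not reported results.

```python
def relative_improvement(baseline: float, new: float) -> float:
    """Relative change in success rate, e.g. 0.40 -> 0.60 is a 0.5 (50%) gain."""
    if baseline <= 0:
        raise ValueError("baseline success rate must be positive")
    return (new - baseline) / baseline


def mean_relative_improvement(results: dict[str, tuple[float, float]]) -> float:
    """Average the per-robot relative improvements."""
    gains = [relative_improvement(base, new) for base, new in results.values()]
    return sum(gains) / len(gains)


# Placeholder (baseline, cross-embodiment) success rates for made-up robots.
results = {
    "robot_a": (0.40, 0.60),
    "robot_b": (0.50, 0.80),
}
avg = mean_relative_improvement(results)
print(f"mean relative improvement: {avg:.0%}")
```

Averaging per-robot gains treats every robot equally, regardless of how many trials each one ran. An absolute difference in percentage points would be a different metric and would give different numbers.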

Open X-Embodiment Dataset: A Step Towards Robotic Mastery

The Open X-Embodiment dataset, comprising data from 22 robot embodiments, is a critical step towards training a generalist model capable of controlling many types of robots. Created in collaboration with over 20 academic research institutions, it is the most comprehensive robotics dataset of its kind to date.

Introducing RT-X: A General-Purpose Robotics Model

RT-X builds on two of Google DeepMind’s robotics transformer models, RT-1 and RT-2, and demonstrates how a diverse, cross-embodiment dataset enables significantly improved performance. In tests, the RT-1-X model trained with the Open X-Embodiment dataset outperformed the original model by 50% on average.

Emergent Skills in RT-X

Experiments demonstrated that co-training with data from different platforms imbues the RT-2-X model with additional skills not present in the original dataset. This equips it to perform novel tasks, demonstrating the power of diverse training data.

Emergent skills are skills the model could not perform before but learned once data from other robots was combined into its training. RT-2-X was three times as successful as the previous best model on these emergent skills, which shows that adding data from other robots broadens the range of tasks even for a robot that already has large amounts of data available. Taken together, these results suggest that scaling learning with more diverse data and better models can lead to more useful helper robots[1].

Responsible Advancements in Robotic Research

Google DeepMind’s work shows that models that generalize across embodiments can deliver significant performance improvements. Future research could explore combining these advances with self-improvement, so that models can improve from their own experience. Another open question is how different dataset mixtures affect cross-embodiment generalization.
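To make the idea of a dataset mixture concrete, the sketch below draws training examples from several per-robot datasets according to adjustable weights. It is a minimal illustration under assumed names (`sample_mixture`, the robot labels, and the example strings are hypothetical), not the sampling scheme used for RT-X.

```python
import random


def sample_mixture(
    datasets: dict[str, list[str]],
    weights: dict[str, float],
    num_samples: int,
    seed: int = 0,
) -> list[tuple[str, str]]:
    """Draw examples by first picking a dataset by weight, then an example."""
    rng = random.Random(seed)  # local generator keeps sampling reproducible
    names = list(datasets)
    probs = [weights[name] for name in names]
    batch = []
    for _ in range(num_samples):
        name = rng.choices(names, weights=probs, k=1)[0]
        batch.append((name, rng.choice(datasets[name])))
    return batch


# Placeholder datasets for two made-up robot types.
datasets = {
    "arm_a": ["a_episode_0", "a_episode_1", "a_episode_2"],
    "arm_b": ["b_episode_0"],
}

# Two candidate mixtures: an even split and one that excludes arm_b entirely.
even = sample_mixture(datasets, {"arm_a": 0.5, "arm_b": 0.5}, num_samples=20)
only_a = sample_mixture(datasets, {"arm_a": 1.0, "arm_b": 0.0}, num_samples=20)
print(sum(name == "arm_a" for name, _ in even), "of 20 samples from arm_a")
```

Comparing models trained under different weight settings is one way the effect of a mixture on cross-embodiment generalization could be studied.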

By advancing robotics research in an open and responsible manner, this work brings us one step closer to a future where general-purpose robots make our lives easier, more efficient, and more enjoyable.

Closing Thoughts

The work being done by Google DeepMind and its partners marks a significant shift in general-purpose robotics. By developing datasets and models that generalize across many robot embodiments, they are not only enhancing robotic performance but also broadening the range of achievable tasks. This could have important implications for industries from healthcare to manufacturing and beyond. As general-purpose robots become more capable, we can anticipate a considerable positive impact on productivity and efficiency. We stand at the cusp of a future where robots can adapt to a wide array of tasks, transforming not only the way we work but also how we live.