June 1, 2025

Deepseek releases 0528 Update

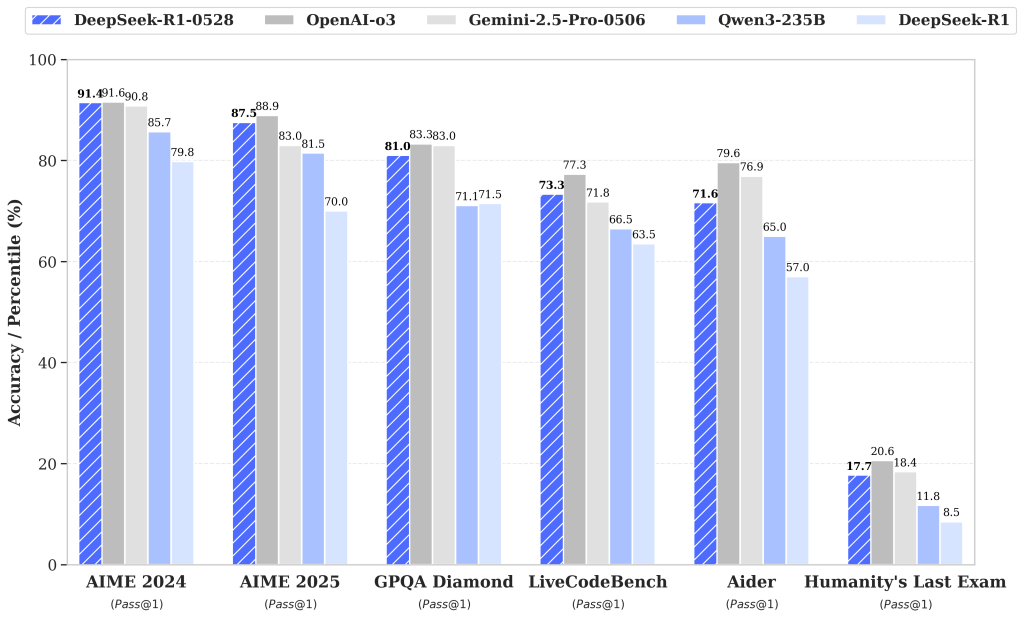

DeepSeek AI’s latest update – DeepSeek R1-0528 (released May 28, 2025) – has turned heads across the AI community. This “minor” version bump actually brings major performance leaps and new features. According to DeepSeek’s own report, R1-0528 has dramatically improved its reasoning depth and reliability by “leveraging increased compute and new post-training optimizations”. Benchmarks show it now rivals (and in some cases even exceeds) the performance of top proprietary models like OpenAI’s GPT-4o (o3) and Google’s Gemini 2.5 Pro. Remarkably, this all comes in an open source package (MIT license) – a 685-billion parameter Mixture-of-Experts model with a 128K token context window. The chart below (from DeepSeek’s eval) highlights R1-0528 (striped bars) against OpenAI, Google, and others: it ties or beats them on hard reasoning tasks like math contests and coding challenges while leaving its predecessor far behind:

Figure: DeepSeek R1-0528 (blue stripes) compared to leading models (solid bars) on various benchmarks. R1-0528 generally matches or exceeds OpenAI’s o3 and Google’s Gemini 2.5 Pro, with huge improvements over the prior R1 release.

Performance Leaps: Closing the Gap with GPT-4

DeepSeek’s evaluation tables back up the bold claims. Simple general knowledge metrics (like MMLU) improve modestly (e.g. MMLU-Redux EM went from 92.9% to 93.4%), but hard reasoning and coding scores skyrocketed. For example, on the challenging AIME math contest, R1’s accuracy jumped from 70.0% to 87.5%. In fact, DeepSeek reports that R1-0528 now “uses nearly twice as many tokens per question” (23K vs 12K) and “deep chain-of-thought reasoning” to reach that score. Other math tests (AIME 2024, CNMO 2024, etc.) saw similar big gains (e.g. AIME 2024: 79.8%→91.4%). Coding benchmarks also surged: LiveCodeBench accuracy rose (63.5→73.3), Codeforces difficulty jumped (rating 1530→1930), and problem-solving (Aider-Polyglot) went from 53.3% to 71.6%. Even unusual tasks like the “Humanity’s Last Exam” (a logic puzzle) doubled from 8.5% to 17.7%. In summary, every major domain – math, code, logic – shows double-digit improvement. As one analysis notes, R1-0528 “vaulted into the upper echelon of current AI models” and “is the most powerful among open-weight models”, approaching the leading closed-source LLMs.

Under the Hood: Massive Scale and New Optimizations

How did DeepSeek pull this off? R1 0528 is enormous: the full model has 685 billion parameters, implemented as a Mixture of Experts (MoE) so that only about 37B parameters are active per request. It was trained with massive compute and then fine-tuned with new “posttraining optimizations.” The context window is also huge—up to 128,000 tokens—enabling much longer chain-of-thought than most models. (By comparison, GPT-4o’s context is around 1M in newer variants, but 128K is still far above the typical 32K or 64K in many models.)

Importantly, despite the size, the weights are fully open source (MIT license). This means anyone can study the model, fine-tune it, or run it locally. DeepSeek even lists support for multiple tensor formats (BF16, F8_E4M3 8-bit, etc.) and provides code to run R1 on local hardware.

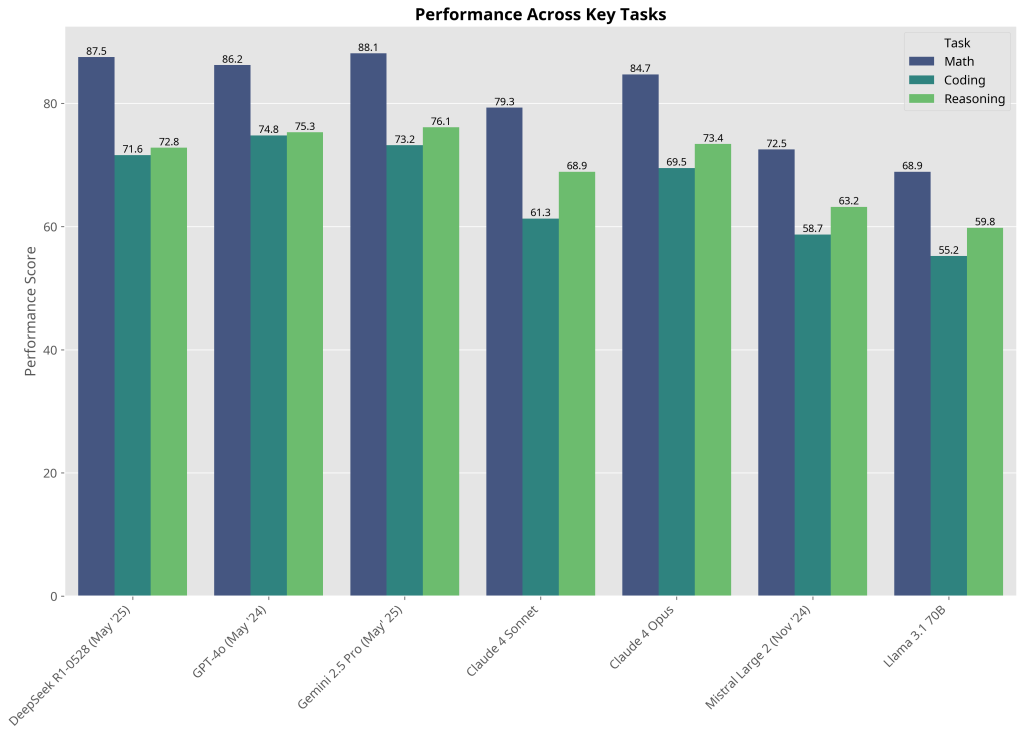

One external summary highlights that R1 0528 “is a serious competitor to top-tier proprietary models … maintaining the cost-efficiency and accessibility of open source development.” (In fact, the original R1 was reportedly built for under $6 million, and its results “shook the ‘spend more for better AI’ paradigm” by rivaling billion-dollar models cheaply.)

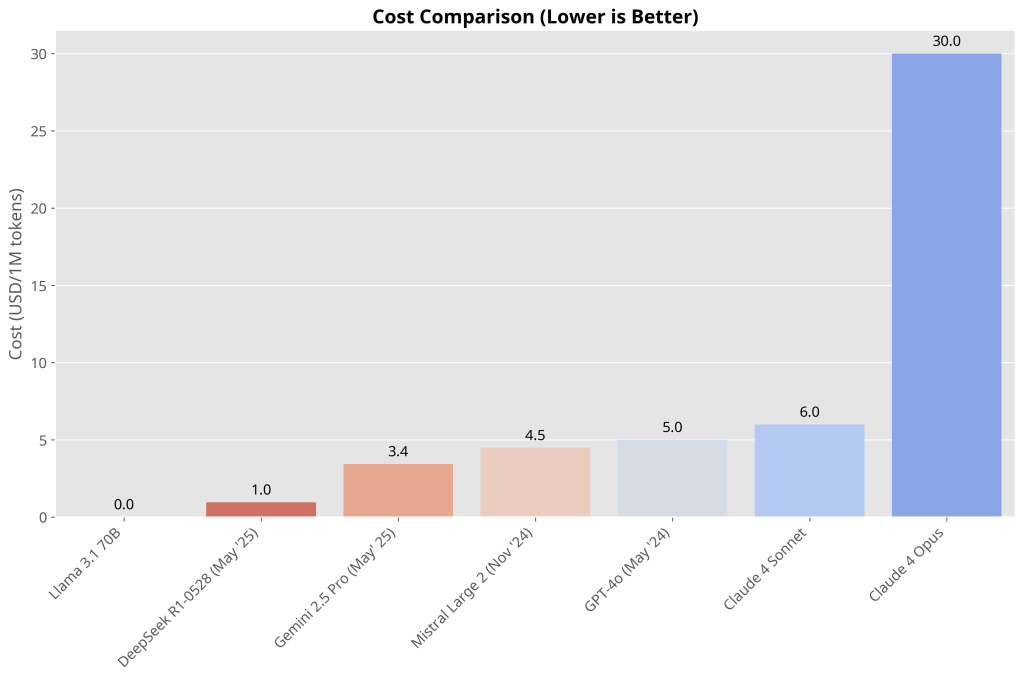

At $0.96 per million tokens, DeepSeek offers significant savings compared to GPT-4o ($5.00), Gemini 2.5 Pro ($3.44), and Claude models.

New Capabilities: Fewer Hallucinations and API Friendliness

| Benchmark | R1-0528 | Previous R1 | Improvement |

|---|---|---|---|

| AIME Math Contest | 87.5% | 70.0% | +17.5% |

| LiveCodeBench | 73.3% | 63.5% | +9.8% |

| Aider-Polyglot | 71.6% | 53.3% | +18.3% |

| Humanity’s Last Exam | 17.7% | 8.5% | +109% |

| Parameters | 685B (MoE) | ||

| Active Params/Query | 37B | ||

| Context Window | 128K tokens | ||

| License | MIT | ||

| 685B → 8B Model | Matches Qwen3-235B on AIME | ||

| Performance Gain | +10% over baseline 8B | ||

| Hardware Requirement | ~20GB RAM | ||

| ✅ Reduced Hallucinations | ✅ Enhanced Function Calling | ||

| ✅ Structured JSON Output | ✅ “Vibe Coding” Support |

Beyond raw scores, R1-0528 adds several practical features. DeepSeek explicitly notes a “reduced hallucination rate” in this update, addressing a common issue in LLMs. It also “enhanced support for function calling” and JSON output, aligning with modern LLM APIs. In plain terms, R1-0528 can now structure its answers in JSON and invoke user-defined functions more reliably – critical for developers building apps on top of the model. The release notes tout these as “front-end capabilities,” meaning the model plays nicer with chat interfaces and coding assistants (DeepSeek calls it “vibe coding” support). These features put R1-0528 more on par with GPT-4 and Claude, which also offer function-calling APIs. All of this makes the model surprisingly “user-friendly” for such a new release: no change in basic API usage (you still prompt it like before), but you gain new output modes.

Test the R1 Update

Given this prompt:

Design a Simple Analog Clock Showing a Specific Time as an SVGThis tests math skills (angles), layout, and ability to translate time into visual elements.

Deepseek output:

<svg xmlns="http://www.w3.org/2000/svg" viewBox="0 0 200 200">

<!-- Clock face -->

<circle cx="100" cy="100" r="95" fill="#f0f8ff" stroke="#333" stroke-width="2"/>

<!-- Hour markers -->

<g stroke="#333" stroke-width="2">

<line x1="100" y1="15" x2="100" y2="25" transform="rotate(30 100 100)"/>

<line x1="100" y1="15" x2="100" y2="25" transform="rotate(60 100 100)"/>

<line x1="100" y1="15" x2="100" y2="25" transform="rotate(90 100 100)"/>

<line x1="100" y1="15" x2="100" y2="25" transform="rotate(120 100 100)"/>

<line x1="100" y1="15" x2="100" y2="25" transform="rotate(150 100 100)"/>

<line x1="100" y1="15" x2="100" y2="25" transform="rotate(180 100 100)"/>

<line x1="100" y1="15" x2="100" y2="25" transform="rotate(210 100 100)"/>

<line x1="100" y1="15" x2="100" y2="25" transform="rotate(240 100 100)"/>

<line x1="100" y1="15" x2="100" y2="25" transform="rotate(270 100 100)"/>

<line x1="100" y1="15" x2="100" y2="25" transform="rotate(300 100 100)"/>

<line x1="100" y1="15" x2="100" y2="25" transform="rotate(330 100 100)"/>

<!-- 12 o'clock marker (thicker) -->

<line x1="100" y1="10" x2="100" y2="25" stroke-width="3"/>

</g>

<!-- Hour hand (10:10) -->

<line x1="100" y1="100" x2="100" y2="60"

stroke="#333" stroke-width="6" stroke-linecap="round"

transform="rotate(305 100 100)"/>

<!-- Minute hand (10 minutes) -->

<line x1="100" y1="100" x2="100" y2="30"

stroke="#666" stroke-width="4" stroke-linecap="round"

transform="rotate(63 100 100)"/>

<!-- Second hand (30 seconds) -->

<line x1="100" y1="100" x2="100" y2="20"

stroke="#e74c3c" stroke-width="2" stroke-linecap="round"

transform="rotate(180 100 100)"/>

<!-- Center cap -->

<circle cx="100" cy="100" r="6" fill="#333"/>

<circle cx="100" cy="100" r="3" fill="#e74c3c"/>

</svg>Key Features:

Time Display: Shows exactly 10:10:30

Hand Calculations:

Hour hand: 305° (10 hours + 10/60 hours = 305°)

Minute hand: 63° (10 minutes + 30/60 minutes = 63°)

Second hand: 180° (30 seconds × 6°/sec = 180°)This is a perfect output showcasing the model’s ability to translate abstract concepts like time into accurate and well-structured visual representations. It demonstrates spatial reasoning, precise use of geometry, and a solid understanding of SVG syntax.

Distillation Magic: A Tiny Model with Big-Brain Logic

Perhaps most surprising is that DeepSeek has distilled R1 0528’s reasoning into much smaller models. They report fine-tuning an 8B parameter version (Qwen3 8B base) using R1’s chain-of-thought data. The result—DeepSeek R1 0528 Qwen3 8B—achieves state-of-the-art open-source performance on benchmarks like AIME. In fact, this compact 8B model outperforms the vanilla 8B baseline by over 10% on AIME 2024 and matches the score of the much larger Qwen3 235B model.

In practical terms, an 8B model can now perform as if it had dozens of times more parameters, simply by inheriting DeepSeek’s reasoning strategy. This suggests that the structure of R1’s knowledge—its chain-of-thought reasoning—is extremely powerful and transferable. It also means that advanced reasoning is no longer confined to massive data centers: a distilled 8B model can run on a single high-end GPU.

Running R1 Locally: Democratizing High-End AI

Because the weights are open, R1-0528 can actually be run on community hardware. The official Hugging Face repo has the full model (split across ~163 safetensor files, totalling hundreds of GB). The community has produced manageable formats and guides: for example, one enthusiast notes that the 8B distilled model runs with only ~20 GB of RAM, delivering around 8 tokens/sec on a 48 GB GPU. (By contrast, the full 685B model is enormous – one posted GGUF is ~52 GB compressed – requiring beefy machines.) Nonetheless, even the big model can be hosted on inference services, and DeepSeek provides a how-to for local deployment. In short, anyone can spin up R1-0528 or its smaller sibling, a far cry from early GPT-4 days. This openness is a core part of DeepSeek’s strategy: as their docs emphasize, R1’s code and weights “support commercial use” and even allow derivative works like more distillations.

Why R1-0528 Matters

DeepSeek’s R1-0528 update is turning heads with its 87.5% accuracy on the AIME 2025 math test, putting it in league with top models like OpenAI’s o3 and Google’s Gemini 2.5 Pro. The update introduces enhanced reasoning, function calling, JSON output, and better context handling—all while remaining completely free and open. Many see this as more than just a version bump; it’s a signal that open models can now compete seriously with the biggest names in AI.

Beyond raw performance, R1-0528 is gaining traction through integrations with Ollama’s Thinking Mode, DeepSeek Engineer v2, and Taskmaster, while rising to #11 on the Context Arena leaderboard. Although some caution that it’s simply catching up, the sentiment around its accessibility, speed, and transparency is overwhelmingly positive. It’s a clear step forward in open AI, powerful enough for serious work, lightweight enough for broad adoption, and free enough to shift expectations

Sources: Release notes and model card from DeepSeek; official DeepSeek API news post; third-party analyses and benchmarks; community reports on local usage. These show the performance numbers, new features, and open-source availability summarized above.

Try it yourself: Play with DeepSeek-R1-0528 on Hugging Face ↗