OpenAI has introduced a new Assistants API that lets developers build powerful, customizable AI assistants. Currently in beta, the API lets developers combine OpenAI models and tools to create assistants capable of conversing, retrieving knowledge, generating content, and more. The key features of the Assistants API include:

- The ability to tune an assistant’s personality and capabilities by providing specific instructions when calling OpenAI models like GPT-4. This allows for highly personalized assistants.
- Access to multiple tools in parallel, such as the OpenAI-hosted Code Interpreter and Retrieval tools. Developers can also integrate their own custom tools through function calling.
- Persistent chat threads that store conversation history to provide assistants with context. Messages can be appended to threads as users reply.
- Support for files in multiple formats that assistants can reference during conversations. Assistants can also generate files like images during conversations.

By leveraging the advanced natural language capabilities of models like GPT-4, the Assistants API enables developers to quickly build and iterate on AI assistants with custom personalities and advanced functionality. The API is still in active development, so developer feedback is critical at this early stage.

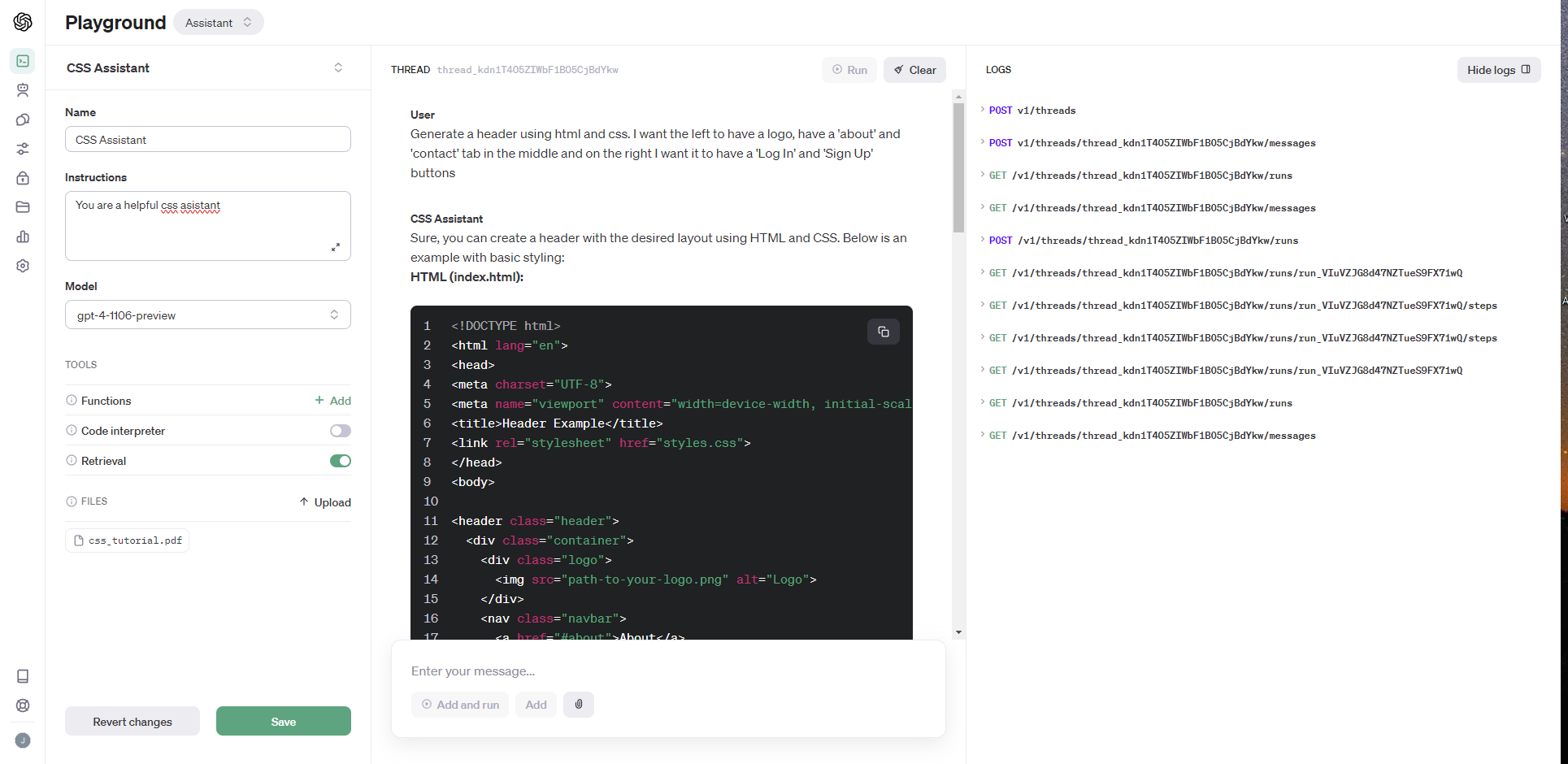

Creating An Assistant

As of now, you can create an assistant through the OpenAI Playground or via the API. Here are the steps to create an assistant.

To get started, creating an Assistant only requires specifying the model to use. But you can further customize the behavior of the Assistant:

- Use the `instructions` parameter to guide the personality of the Assistant and define its goals. Instructions are similar to system messages in the Chat Completions API.
- Use the `tools` parameter to give the Assistant access to up to 128 tools. You can give it access to OpenAI-hosted tools like `code_interpreter` and `retrieval`, or call third-party tools via `function` calling.
- Use the `file_ids` parameter to give tools like `code_interpreter` and `retrieval` access to files. Files are uploaded using the `File` upload endpoint and must have the `purpose` set to `assistants` to be used with this API.
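The three parameters above can be assembled into a request body along these lines. This is only a minimal sketch: `build_assistant_params` is a hypothetical helper (not part of any SDK), and the model name and file ID are placeholders. The tool type strings and the 128-tool cap come from the list above.

```python
MAX_TOOLS = 128  # cap on tools per Assistant, as stated above


def build_assistant_params(model, instructions=None, tools=None, file_ids=None):
    """Assemble keyword arguments for an Assistant creation request.

    Hypothetical helper for illustration; only `model` is required.
    """
    tools = list(tools or [])
    if len(tools) > MAX_TOOLS:
        raise ValueError(f"at most {MAX_TOOLS} tools allowed, got {len(tools)}")

    params = {"model": model}
    if instructions:
        params["instructions"] = instructions
    if tools:
        # Each tool is described by a dict with a "type" key.
        params["tools"] = tools
    if file_ids:
        params["file_ids"] = list(file_ids)
    return params


params = build_assistant_params(
    model="gpt-4-1106-preview",  # placeholder model name
    instructions="You are a CSS tutor. Answer using the attached documentation.",
    tools=[{"type": "code_interpreter"}, {"type": "retrieval"}],
    file_ids=["file-abc123"],  # placeholder ID returned by a file upload
)
print(params)
```

With the `openai` Python package as it looked during the beta, a dict like this was passed as `client.beta.assistants.create(**params)`. Parameter names in a beta API can change, so check the current API reference before relying on them.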

AI Assistant Example

A developer could leverage the Assistants API to create a customized CSS assistant. The assistant could be given a PDF containing CSS documentation and tutorials, which it could then search with the `retrieval` tool instead of relying only on what the model already knows. When conversing with users, it could tap into this knowledge to provide guidance on CSS syntax, explain concepts, and answer questions. The assistant could leverage GPT-4 to generate both human-like explanations and code examples. Persistent threads would allow it to follow extended conversations and reference previous context. Overall, the assistant could serve as a knowledgeable CSS guide, providing users with a natural way to learn and get help with CSS.
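To make the persistent-thread part of this example concrete, here is a rough sketch of one question-and-answer round trip. It assumes `client` is an `OpenAI()` instance from the `openai` Python package as it looked during the beta (the `client.beta.threads` namespace). `ask_assistant` and its arguments are hypothetical names for illustration, and the run-polling step is not described in this post, so treat it as an assumption.

```python
import time

# Run states at which polling should stop (assumed from the beta API).
STOP_STATES = {"completed", "failed", "cancelled", "expired", "requires_action"}


def ask_assistant(client, assistant_id, question, thread_id=None, poll_seconds=1.0):
    """Append a user message to a thread, run the assistant, and return the result.

    Pass the returned thread ID back in to continue the same conversation.
    """
    if thread_id is None:
        # No existing conversation: start a new persistent thread.
        thread_id = client.beta.threads.create().id

    # Append the user's message to the thread's history.
    client.beta.threads.messages.create(
        thread_id=thread_id, role="user", content=question
    )

    # Ask the assistant to process the thread, then poll until it stops.
    run = client.beta.threads.runs.create(
        thread_id=thread_id, assistant_id=assistant_id
    )
    while run.status not in STOP_STATES:
        time.sleep(poll_seconds)
        run = client.beta.threads.runs.retrieve(thread_id=thread_id, run_id=run.id)

    # The thread now holds both the question and any reply.
    messages = client.beta.threads.messages.list(thread_id=thread_id)
    return thread_id, run.status, messages
```

A follow-up question would reuse the thread, for example `ask_assistant(client, assistant_id, "And on mobile?", thread_id=thread_id)`, so the assistant sees the earlier exchange as context.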

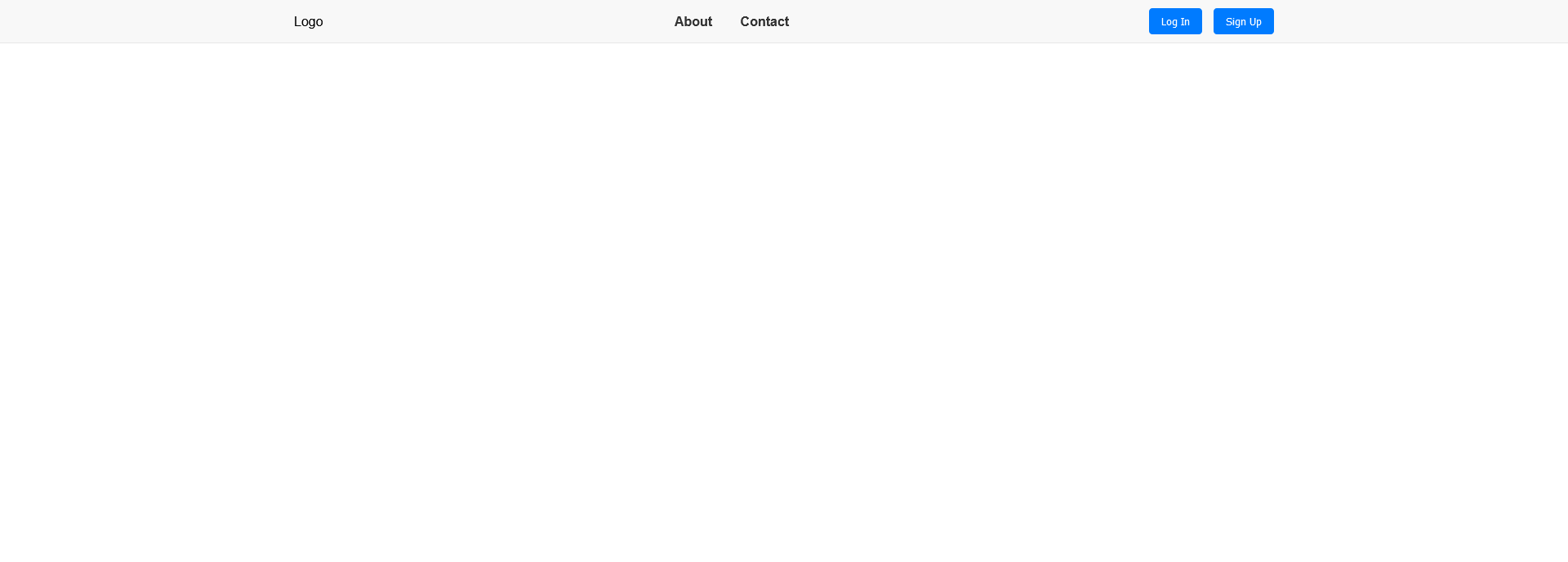

As you can see, the left sidebar shows your assistant’s info along with its settings. In our case, I asked our CSS Assistant to create a header and gave it specific details.

Not a bad-looking header, and it got everything correct. This is far better than before, when language models really seemed to struggle with CSS. You could do this with any documentation. Just note that you can attach a maximum of 20 files per Assistant, and they can be at most 512 MB each, although I wouldn’t be surprised if these limits were raised soon to allow more uploads.
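If you plan to attach a batch of documentation files, a quick local check against those limits can save a failed upload. The sketch below is a hypothetical helper, not part of any SDK. It assumes "512 MB" means 512 × 1024 × 1024 bytes, which the post does not specify.

```python
import os

MAX_FILES_PER_ASSISTANT = 20
MAX_FILE_BYTES = 512 * 1024 * 1024  # assumption: 512 MB read as binary megabytes


def check_attachments(paths):
    """Return a list of problems; an empty list means the files fit the limits."""
    problems = []
    if len(paths) > MAX_FILES_PER_ASSISTANT:
        problems.append(
            f"{len(paths)} files given, limit is {MAX_FILES_PER_ASSISTANT}"
        )
    for path in paths:
        size = os.path.getsize(path)
        if size > MAX_FILE_BYTES:
            problems.append(f"{path} is {size} bytes, limit is {MAX_FILE_BYTES}")
    return problems
```

Calling `check_attachments(["css-docs.pdf"])` on a small file returns an empty list, while more than 20 paths or an oversized file produces a readable message for each problem.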

Closing Thoughts

While the new Assistants API represents an exciting advancement in building customized AI assistants, it’s important to note that this feature is still in the early stages. Currently, access is mainly available through the API itself, although OpenAI plans to start rolling it out to Plus users this week.

Even in beta, the capabilities enabled by the API provide a glimpse of the potential to create truly helpful and specialized AI assistants. As the product develops further, we may see OpenAI open up additional ways for developers and businesses to build on top of their models and tools.

Given OpenAI’s emphasis on creating an ecosystem, developers may eventually even be able to monetize custom assistants. For now, feedback from early adopters will be critical to shaping the product as the team continues active development. While we’re still just scratching the surface, the new Assistants API marks an important step towards OpenAI’s vision for customized and accessible AI.